Product Introduction

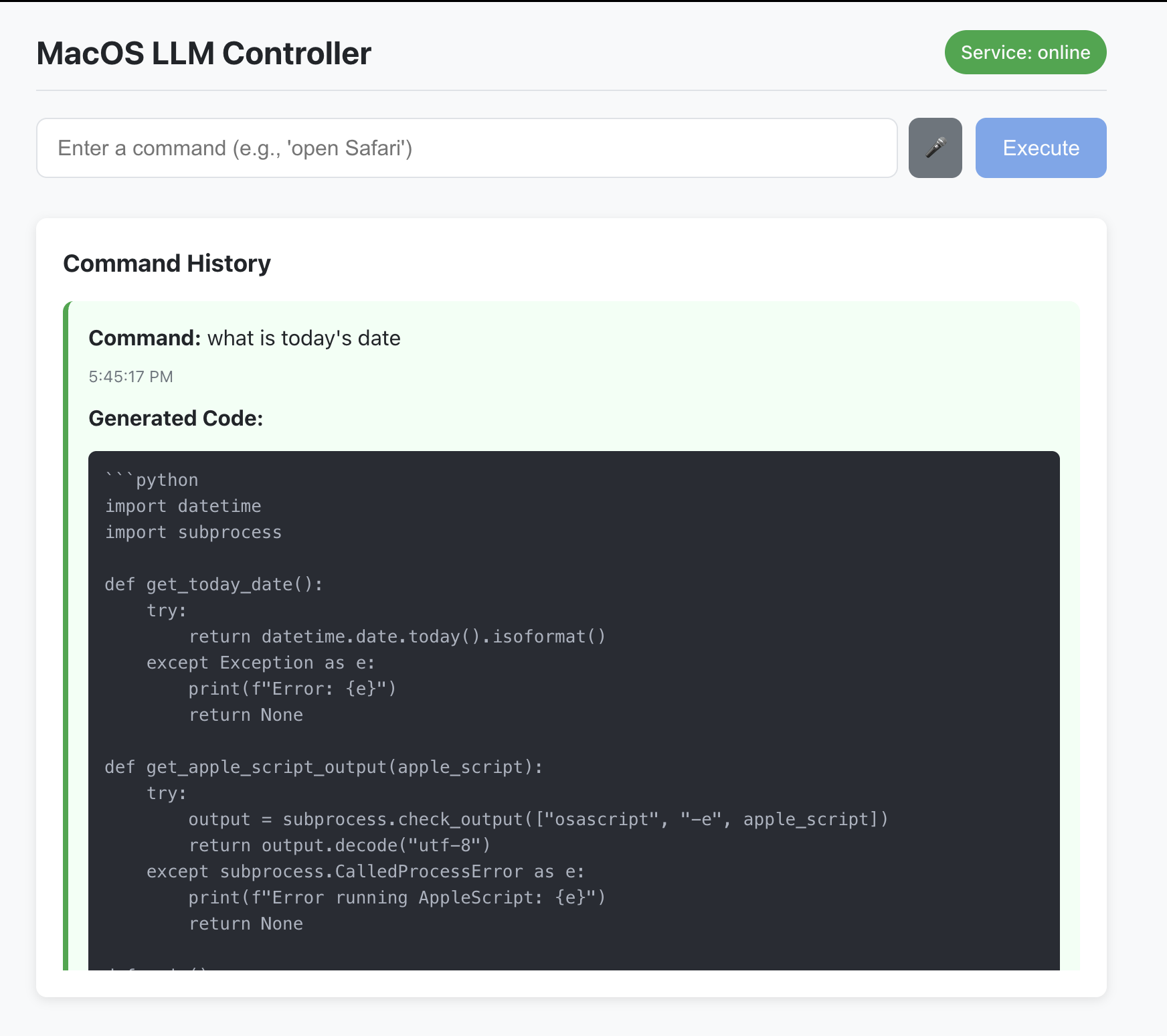

- The Llama MacOS Desktop Controller is a React- and Flask-based application that translates natural language commands into executable macOS system actions using Python code generated by a Large Language Model (LLM). It bridges user intent with system-level operations through AI-driven code synthesis.

- The core value lies in enabling non-technical users to automate macOS tasks without scripting expertise, while offering developers a modular framework for integrating LLM capabilities into desktop control workflows.

Main Features

- The application processes natural language inputs (text or voice) and converts them into Python scripts that interact with macOS APIs, leveraging the Llama-3.2-3B-Instruct model for context-aware code generation.

- Real-time execution feedback is provided through a status dashboard that monitors backend connectivity, LlamaStack integration, and command success/failure states via HTTP response codes.

- A dual-layer safety system validates generated code through syntax checks and restricted macOS API access, preventing unauthorized system modifications while allowing approved automation tasks like file management or application control.
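
To make the dual-layer check concrete, here is a minimal sketch of how syntax validation and restricted access could be combined using Python's standard `ast` module. The names `ALLOWED_IMPORTS`, `BLOCKED_CALLS`, and `validate_generated_code` are hypothetical and chosen for illustration; the project's actual rules and allowlist are not documented here.

```python
import ast
from typing import List

# Hypothetical policy for illustration only, not the project's actual allowlist.
ALLOWED_IMPORTS = {"subprocess", "pathlib", "time"}
BLOCKED_CALLS = {"eval", "exec", "compile", "__import__"}


def validate_generated_code(source: str) -> List[str]:
    """Return a list of problems; an empty list means both layers passed."""
    # Layer 1: syntax check. Code that does not parse is rejected outright.
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error on line {exc.lineno}: {exc.msg}"]

    # Layer 2: walk the syntax tree and flag imports or calls outside the policy.
    problems = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name not in ALLOWED_IMPORTS:
                    problems.append(f"import not allowed: {alias.name}")
        elif isinstance(node, ast.ImportFrom):
            if node.module not in ALLOWED_IMPORTS:
                problems.append(f"import not allowed: {node.module}")
        elif isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in BLOCKED_CALLS:
                problems.append(f"call not allowed: {node.func.id}")
    return problems
```

A backend following this approach would execute a script only when the returned list is empty. Static checks of this kind are easy to bypass on their own, so they are best treated as a first filter in front of restricted execution rather than a complete defense.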

Problems Solved

- Eliminates the need for users to memorize Terminal commands or write Python scripts manually to perform routine macOS operations such as file organization, application launches, or system settings adjustments.

- Primarily targets productivity-focused macOS users, technical support teams managing multiple devices, and developers prototyping AI-assisted workflow automation tools.

- Enables scenarios like batch-renaming files via voice commands, automating repetitive system maintenance tasks through plain English descriptions, or creating custom shortcut sequences through conversational input.

Unique Advantages

- Unlike traditional automation tools that require predefined scripts, this solution generates context-specific code on demand using the Llama-3.2-3B model fine-tuned for macOS API interactions, so it can handle commands that no predefined script covers.

- Implements a proxy architecture that isolates LLM-generated code execution in a sandboxed Flask backend, maintaining system security while permitting authorized AppleScript and System Events API calls.

- Combines Ollama's local model serving with Flask-CORS managed API endpoints to create a privacy-preserving alternative to cloud-based automation services, keeping user data on-device during command processing.
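
As an illustration of the on-device flow, the sketch below sends a command to a locally running Ollama server and extracts the generated code from the reply. It assumes Ollama's default local endpoint and the `llama3.2:3b` model tag, and it calls Ollama directly; the application itself may route requests through LlamaStack instead. The function names and the prompt wording are hypothetical.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL_TAG = "llama3.2:3b"  # assumed Ollama tag for the Llama-3.2-3B model

FENCE = "`" * 3  # Markdown code fence, built here to keep this listing readable
FENCED_BLOCK = re.compile(FENCE + r"(?:python)?\s*\n(.*?)" + FENCE, re.DOTALL)


def build_payload(command: str) -> dict:
    """Build a non-streaming generate request for a natural language command."""
    prompt = (
        "Write Python code for macOS that does the following. "
        "Reply with code only.\n" + command
    )
    return {"model": MODEL_TAG, "prompt": prompt, "stream": False}


def extract_code(reply: str) -> str:
    """Strip a Markdown code fence if the model wrapped its answer in one."""
    match = FENCED_BLOCK.search(reply)
    return (match.group(1) if match else reply).strip()


def generate_code(command: str, timeout: float = 60.0) -> str:
    """Send the command to the local Ollama server and return the generated code."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(build_payload(command)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return extract_code(body["response"])
```

Because the request targets `localhost`, the command text and the generated code stay on the machine, which is the privacy property described above.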

Frequently Asked Questions (FAQ)

- How does the application ensure the safety of generated Python code? The backend performs static analysis for dangerous system calls, restricts execution to a predefined allowlist of macOS APIs, and runs code in a sandboxed environment with limited permissions.

- What macOS versions are supported? The controller requires macOS Catalina (10.15) or later due to dependencies on Python 3.8+ and modern System Events framework integrations.

- How can I troubleshoot failed command executions? The Flask backend logs detailed error traces including LLM output validation failures, Python runtime errors, and macOS API permission issues, accessible through the server console.

- Can the voice input feature handle technical terminology? The SpeechRecognition API supports custom vocabulary injection, allowing domain-specific terms related to macOS system operations for improved transcription accuracy.

- What hardware requirements exist for local operation? Running the Llama-3.2-3B model locally via Ollama requires at least 8 GB of RAM; an Apple Silicon chip (M1/M2) is recommended for optimal performance.
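
For readers troubleshooting failed commands, the following sketch shows one way a backend could run generated code in a separate process, capture the error trace, and classify the outcome. It is an assumption-laden illustration: `run_generated_code` and the `HTTP_STATUS` mapping are hypothetical names, and a child process with a timeout offers isolation from the server process only, not a full sandbox.

```python
import subprocess
import sys

# Hypothetical mapping from outcome to HTTP status code, for illustration only.
HTTP_STATUS = {"success": 200, "runtime_error": 500, "timeout": 504}


def run_generated_code(source: str, timeout: float = 10.0) -> dict:
    """Run code in a separate Python process and report what happened."""
    try:
        completed = subprocess.run(
            # -I starts Python in isolated mode (ignores user site and env vars).
            [sys.executable, "-I", "-c", source],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        # The child process is killed once the timeout elapses.
        return {"status": "timeout", "stdout": "", "stderr": ""}

    status = "success" if completed.returncode == 0 else "runtime_error"
    # stderr carries the Python traceback when the script raised an exception.
    return {"status": status, "stdout": completed.stdout, "stderr": completed.stderr}
```

In this sketch, a `runtime_error` result carries the traceback in `stderr`, which is the kind of detail a server console log would surface, and `HTTP_STATUS[result["status"]]` gives a response code that a status dashboard could display.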