Product Introduction

- Siloam AI (alpha) is an observability and monitoring tool designed specifically for tracking and analyzing Large Language Model (LLM) usage across applications. It provides centralized visibility into API calls, logs, and performance metrics to help teams debug, optimize, and improve their LLM-powered products.

- The core value of Siloam AI is real-time monitoring, anomaly detection, and cost analytics tailored to LLM workflows, giving developers and businesses of all sizes reliable, actionable insight into how their models behave.

Main Features

- Live Request Monitoring: Siloam AI captures every LLM API call in real time without sampling or delays, providing full visibility into model usage, token consumption, and response latency. This feature supports immediate debugging and performance tracking across models like GPT-4.

- Smart Detection (Beta): The platform automatically flags anomalies, inconsistencies, and potential hallucinations in LLM responses using AI-powered validation. This beta feature helps surface issues before they reach end users.

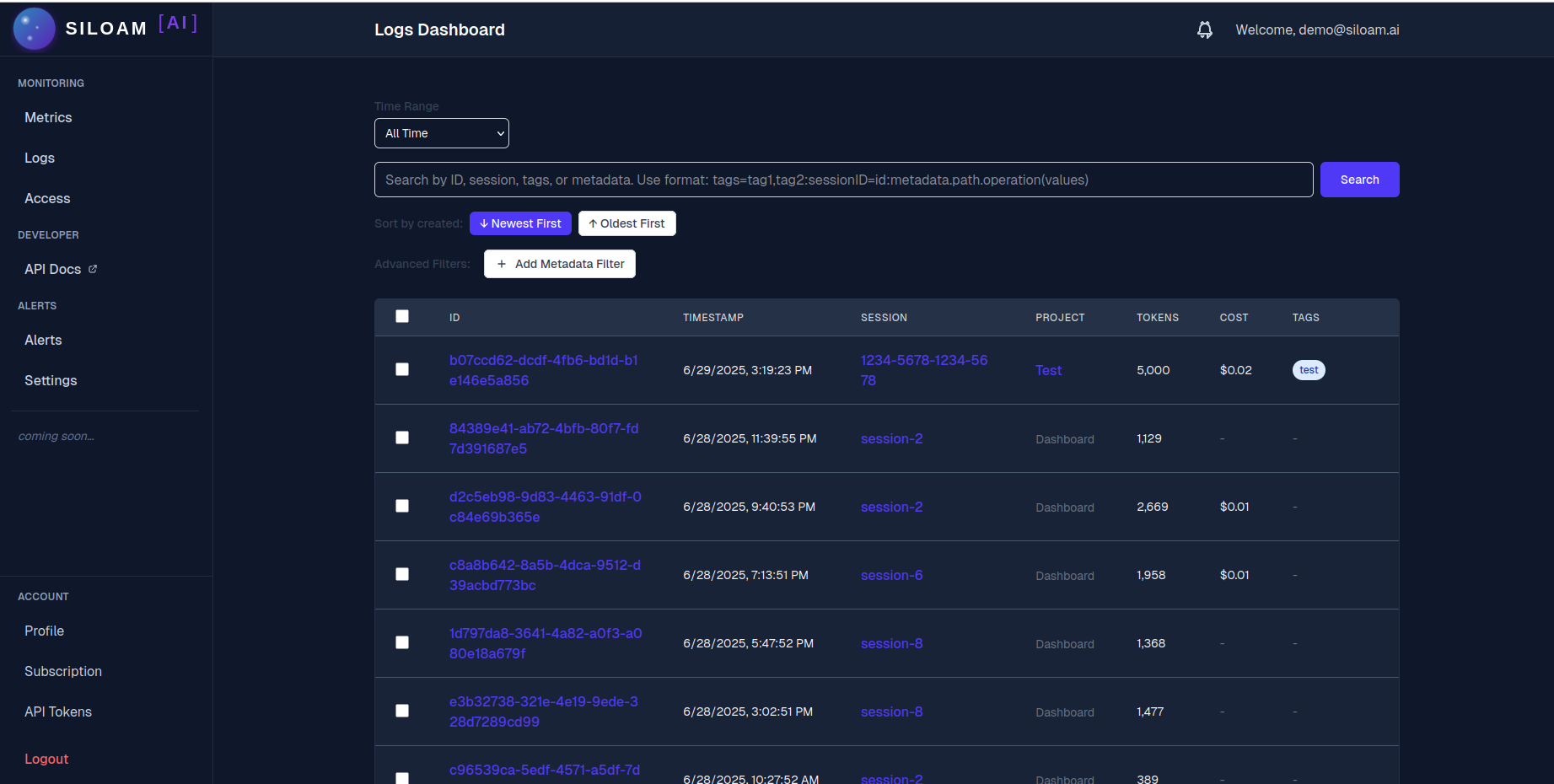

- Lightning Search and Analytics: Siloam AI enables millisecond-level search across millions of logs with filters for model type, user sessions, error types, or custom tags. Pattern detection algorithms highlight usage trends and cost outliers for proactive optimization.
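The features above name the data captured for each call (model, token consumption, latency) and the search filters (model type, user session, error type, custom tags). The sketch below shows one way such a record and filter could look as a minimal in-memory model. `LLMLogRecord`, `search_logs`, and every field name are hypothetical illustrations, not Siloam AI's actual schema or API.

```python
from dataclasses import dataclass, field
from typing import Iterable, Optional


@dataclass
class LLMLogRecord:
    # Hypothetical schema for one captured LLM API call (not Siloam AI's real data model).
    request_id: str
    model: str
    session_id: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float
    error_type: Optional[str] = None  # e.g. "rate_limit"; None when the call succeeded
    tags: set[str] = field(default_factory=set)  # custom tags attached by the application

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens


def search_logs(
    logs: Iterable[LLMLogRecord],
    model: Optional[str] = None,
    session_id: Optional[str] = None,
    error_type: Optional[str] = None,
    tag: Optional[str] = None,
) -> list[LLMLogRecord]:
    """Return the records that match every filter that is not None (linear scan)."""
    results = []
    for rec in logs:
        if model is not None and rec.model != model:
            continue
        if session_id is not None and rec.session_id != session_id:
            continue
        if error_type is not None and rec.error_type != error_type:
            continue
        if tag is not None and tag not in rec.tags:
            continue
        results.append(rec)
    return results


# Illustrative sample data (made up for this example).
logs = [
    LLMLogRecord("r1", "gpt-4", "s1", 120, 80, 950.0, tags={"checkout"}),
    LLMLogRecord("r2", "gpt-4", "s2", 300, 0, 120.0, error_type="rate_limit"),
    LLMLogRecord("r3", "gpt-3.5-turbo", "s1", 50, 40, 310.0, tags={"checkout"}),
]
```

For example, `search_logs(logs, session_id="s1", tag="checkout")` returns records `r1` and `r3`. A production log store would back these filters with indexes rather than a linear scan; the scan here only keeps the matching rule (every supplied filter must match) easy to see.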

Problems Solved

- Centralized LLM Observability: Developers often struggle to monitor fragmented LLM usage across applications, leading to blind spots in performance, costs, and errors. Siloam AI unifies all LLM traffic into a single dashboard.

- Target User Groups: The tool is designed for engineering teams, product managers, and AI startups building LLM-driven applications that require granular insights into model behavior and operational efficiency.

- Typical Use Cases: Debugging unexpected model outputs, auditing token usage to reduce costs, detecting hallucination risks in production environments, and complying with internal AI governance policies.
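Auditing token usage for cost, one of the use cases above, comes down to multiplying token counts by per-token prices and looking for unusually expensive requests. A minimal sketch follows. The model names, the prices, and the "more than three times the median" outlier rule are illustrative assumptions, not Siloam AI's pricing data or detection algorithm.

```python
from statistics import median

# Hypothetical USD prices per 1,000 tokens; real prices vary by provider and model.
PRICES_PER_1K = {
    "large-model": {"prompt": 0.03, "completion": 0.06},
    "small-model": {"prompt": 0.0005, "completion": 0.0015},
}


def request_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Cost of one request in USD, using the hypothetical price table above."""
    price = PRICES_PER_1K[model]
    return (prompt_tokens * price["prompt"] + completion_tokens * price["completion"]) / 1000


def cost_outliers(costs: dict[str, float], factor: float = 3.0) -> list[str]:
    """IDs of requests that cost more than `factor` times the median request cost."""
    if not costs:
        return []
    threshold = factor * median(costs.values())
    return sorted(rid for rid, c in costs.items() if c > threshold)
```

The threshold is based on the median because a single very expensive request barely moves the median, whereas it can inflate a mean enough to hide itself.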

Unique Advantages

- Real-Time Data Without Sampling: Unlike monitoring tools that rely on sampling or delayed batch processing, Siloam AI processes 100% of LLM traffic in real time, so no requests are missing from the data.

- AI-Powered Validation: The platform’s proprietary anomaly detection algorithms analyze response coherence, consistency, and alignment with expected patterns, adding a quality-assurance (QA) layer for LLM outputs.

- Developer-Centric Design: Siloam AI prioritizes ease of integration (via simple REST APIs), transparent pricing with no hidden fees, and scalable storage options, making it accessible for startups and enterprises alike.

Frequently Asked Questions (FAQ)

- How does Siloam AI handle data integration? Siloam AI provides REST API endpoints and prebuilt SDKs for seamless integration with existing LLM workflows, requiring only an API key and minimal code changes to start logging requests.

- Can Siloam AI analyze high-volume LLM traffic? The platform’s architecture is optimized for high throughput, supporting searches across millions of logs with sub-millisecond latency, configurable retention periods, and unlimited storage on enterprise plans.

- How accurate is the hallucination detection feature? The beta Smart Detection system uses ensemble models trained on diverse LLM outputs to identify potential hallucinations, with ongoing improvements based on user feedback and real-world data.

- What security measures are in place? All data is encrypted in transit and at rest, with role-based access control (RBAC) and optional on-premises deployment for enterprise customers requiring strict compliance.

- Is there a free tier for testing? Siloam AI offers a free plan with 1,000 monthly logs, 30-day retention, and basic analytics, allowing teams to evaluate the platform before upgrading to paid tiers.
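The integration answer above says that logging needs only an API key and minimal code changes. As a sketch of what that could involve over plain REST, the code below times an LLM call and packages its metrics into a JSON POST request using only Python's standard library. The endpoint URL, the bearer-token header, and the payload fields are placeholder assumptions, not Siloam AI's documented API; the prebuilt SDKs presumably wrap a step like this.

```python
import json
import time
import urllib.request

# Hypothetical endpoint; substitute the real URL from Siloam AI's documentation.
INGEST_URL = "https://api.example.com/v1/logs"


def timed_call(fn, *args, **kwargs):
    """Run an LLM client call and return (result, latency in milliseconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, (time.perf_counter() - start) * 1000


def build_log_request(api_key: str, model: str, prompt_tokens: int,
                      completion_tokens: int, latency_ms: float) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying one call's metrics as JSON."""
    payload = {  # hypothetical payload schema
        "model": model,
        "prompt_tokens": prompt_tokens,
        "completion_tokens": completion_tokens,
        "latency_ms": latency_ms,
        "timestamp": time.time(),
    }
    return urllib.request.Request(
        INGEST_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed bearer-token auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To actually send a record, pass the request to `urllib.request.urlopen(req, timeout=5)`; it is left unsent here so the example runs without network access or a real key.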